The COHRINT Lab brings together expertise in machine learning, sensor fusion, control and planning algorithms for autonomous mobile robot systems, with a special emphasis on aerospace applications. Our research focuses on intelligent human-robot interaction and scalable distributed robot-robot reasoning strategies for solving dynamic decision-making problems under uncertainty. Check out this video to learn more!

Software developed by our lab is available on GitHub.

Active and Recent Projects

INPASS

COLDTech (Concepts for Ocean worlds Life Detection Technology)

Sponsored by: National Aeronautics and Space Administration (NASA)

PI: Jay McMahon (CU Boulder); co-PIs: Nisar Ahmed (CU Boulder) and Morteza Lahijanian (CU Boulder)

Future planetary exploration missions on the surface of distant bodies such as Europa or Enceladus cannot rely on human-in-the-loop operations because of time delays, dynamic environments, limited mission lifetimes, and the many unknown unknowns inherent in exploring such environments. Robotic explorers must therefore be capable of operating autonomously, both to keep the mission running and to maximize the amount and quality of the scientific data gathered from each mission. Toward this goal, this project is developing a system that maximizes the science obtained by a robotic lander and delivered to scientists on Earth with minimal asynchronous human interaction. For further details on this project, please refer to the following papers [arXiv 2023, ICRA 2023, IEEE Aerospace 2022, IEEE Aerospace 2023].

CAMP (Collaborative Analyst-Machine Perception)

Sponsored by: United States Space Force

PI: Nisar Ahmed (CU Boulder); co-PI: Danielle Szafir (CU Boulder)

As automated ingestion and processing of high-volume satellite remote sensing data continues to expand, human analysts and operators will always need to be kept in the loop. Sophisticated machine learning algorithms for automated event detection, tracking, and data fusion do not perform perfectly in all situations. Domain users are often unaware of these limitations and are currently unable to interact directly with these algorithms to mitigate them. New technologies that better balance automation and human oversight are needed to exploit the best of both worlds at various levels of the information pipeline. This research will combine probabilistic machine learning, data fusion, and human input processing algorithms into a unified software suite that enables bilateral information exchange between automation and analysts. This research is being conducted in collaboration with Lockheed Martin Space Systems and the TAP Lab. For further details on this project, please refer to the following papers [AIAA 2024, AIAA 2024].

RINAO (Rescuer Interface for iNtuitive Aircraft Operation)

Sponsored by: NSF Center for Autonomous Air Mobility and Sensing (CAAMS)

RINAO aims to implement and validate an architecture for a novel method of autonomous sUAS operator command & control for use in public safety emergencies, with an initial focus on search & rescue. The architecture will implement autonomous decision-making algorithms and human-autonomy interaction methods (including sketch-based interaction) recently validated in simulation and human subject studies. The result will be a compact and fieldable full-scale system, with a supporting software reference implementation that can be readily adapted to other collaborative human-autonomy information gathering sUAS missions. The realized system will be tested by first responders of the Boulder Emergency Squad (BES) in relevant environments, such as the Boulder County Regional Fire Training Center.

Previous Projects

CAML (Competency-Aware Machine Learning)

Sponsored by: Defense Advanced Research Projects Agency (DARPA)

PI: Rebecca Russel (Draper); Nisar Ahmed (CU Boulder); Eric Frew (CU Boulder); Ufuk Topcu (UT Austin); Zhe Xu (ASU)

UPDATE IN PROGRESS

For further details, please refer to the following papers: [ICRA 2023, HRI 2023, IROS 2022, IROS 2022, ICRA 2022, AIAA 2022, NeurIPS 2020]

Harnessing Human Perception in UAS via Bayesian Active Sensing

Sponsored by: NSF IUCRC Center for Unmanned Aerial Systems (C-UAS)

UAS operators and users can play valuable roles as “human sensors” that contribute information beyond the reach of vehicle sensors. For instance, operators in search missions can provide “soft data” to narrow down possible survivor locations using semantic natural language observations (e.g. “Nothing is around the lake”; “Something is moving towards the fence”), or provide estimates of physical quantities (e.g. masses/sizes of obstacles, distances from landmarks) to help autonomous vehicles better understand search areas and improve decision making. This research focuses on the development of intelligent operator-UAS interfaces for “active human sensing”, so that autonomous UAS can decide how and when to query operators for soft data to expedite online decision making, based on dynamic models of the world and the operator.
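As a rough illustration of how a semantic observation such as “Nothing is around the lake” could narrow down survivor locations, the sketch below applies Bayes' rule on a small discrete grid. It covers only the fusion step, not the decision of when to query the operator. The grid layout, the lake cells, and the two likelihood values are hypothetical numbers chosen for illustration, not values from the project.

```python
def fuse_soft_observation(prior, likelihood):
    """Bayes' rule on a discrete grid: posterior is proportional to likelihood * prior."""
    unnormalized = [l * p for l, p in zip(likelihood, prior)]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("observation is impossible under the prior")
    return [u / total for u in unnormalized]


# Hypothetical 1-D search area with 10 cells and a uniform prior on the survivor's cell.
n_cells = 10
prior = [1.0 / n_cells] * n_cells

# Assumed model of the operator's statement "Nothing is around the lake":
# the statement is unlikely if the survivor really is in a lake cell.
lake_cells = {3, 4, 5}
p_say_nothing_if_near_lake = 0.2    # assumed operator miss probability
p_say_nothing_if_elsewhere = 0.95   # assumed probability of a correct negative report
likelihood = [
    p_say_nothing_if_near_lake if cell in lake_cells else p_say_nothing_if_elsewhere
    for cell in range(n_cells)
]

posterior = fuse_soft_observation(prior, likelihood)
```

After the update, probability mass shifts away from the lake cells and toward the rest of the search area, which is the sense in which soft data can narrow a search.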

Robust GPS-Denied Cooperative Localization Using Distributed Data Fusion

Sponsored by: U.S. Army Space and Missile Defense Command

This research will develop new decentralized data fusion (DDF) algorithms for cooperative positioning. Accurate position and navigation information is crucial to mission success for mobile elements, especially in denied and contested environments. To ensure robustness to disrupted communications or GPS/satellite reception, novel sensor fusion algorithms are needed to assure cooperative positioning. This allows elements to treat each other as beacons on a “moving map”, whose uncertain locations are mutually estimated via opportunistic absolute/relative position measurements and then shared with each other. Key technical challenges for such algorithms are to ensure scalability, statistical correctness, and awareness of potential signal interference, while also enabling flexible information integration with minimal computing and communication overhead.
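To make the idea of statistically correct fusion concrete, the sketch below shows covariance intersection, one widely used conservative fusion rule in decentralized data fusion. The project description does not say which fusion rule is used, so this is a stand-in. It combines two position estimates whose cross-correlation is unknown. To stay dependency-free it assumes diagonal covariances, and all numbers are made up for illustration.

```python
def covariance_intersection(mean_a, var_a, mean_b, var_b, weight):
    """Fuse two estimates with diagonal covariances via covariance intersection.

    weight in [0, 1] sets how much of estimate A's information is used.
    """
    fused_mean, fused_var = [], []
    for ma, va, mb, vb in zip(mean_a, var_a, mean_b, var_b):
        information = weight / va + (1.0 - weight) / vb
        fused_var.append(1.0 / information)
        fused_mean.append((weight * ma / va + (1.0 - weight) * mb / vb) / information)
    return fused_mean, fused_var


def best_weight(var_a, var_b, steps=100):
    """Grid-search the weight that minimizes the trace of the fused covariance."""
    def fused_trace(w):
        return sum(1.0 / (w / va + (1.0 - w) / vb) for va, vb in zip(var_a, var_b))
    return min((i / steps for i in range(steps + 1)), key=fused_trace)


# Hypothetical 2-D position estimates from two vehicles:
# A is accurate in x, B is accurate in y.
mean_a, var_a = [0.0, 0.0], [1.0, 4.0]
mean_b, var_b = [2.0, 2.0], [4.0, 1.0]

w = best_weight(var_a, var_b)
fused_mean, fused_var = covariance_intersection(mean_a, var_a, mean_b, var_b, w)
```

Because the rule never assumes the two estimates are independent, it avoids double-counting shared information, at the cost of a more cautious fused covariance than a naive Kalman-style merge would report.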

“Machine Self-Confidence” for Calibrating Trust in Autonomy

Sponsored by: NSF IUCRC Center for Unmanned Aerial Systems (C-UAS), and Northrop-Grumman Aerospace Systems

Given the growing complexity and sophistication of increasingly autonomous systems, it is important to allow non-expert users to understand the actual capabilities of autonomous systems so that they can be tasked appropriately. In turn, this must engender trust in the autonomy and confidence in the operator. Competency information could be delivered as explanations of internal decision processes made by the autonomy, but such explanations are often difficult for non-experts to interpret. Instead, we advocate that these insights be conveyed by a shorthand metric of the autonomy’s “self-confidence” in executing the tasks it has been assigned. Formulated correctly, this information should enable a competent user to task the autonomy with enhanced confidence, resulting in both increased system performance and reduced operator workload. Incorrectly constituted or inflated self-confidence can instead lead to inappropriate use of autonomy, or to mistrust that leads to disuse. This project will develop specific metrics for intelligent physical system self-confidence, guided by autonomous aerospace robotics applications involving complex decision-making under uncertainty.

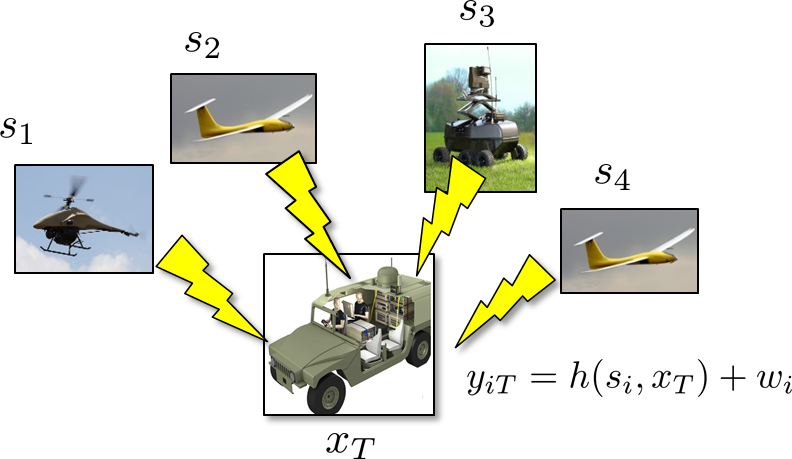

Scalable Cooperative Tracking of RF Ground Targets

Sponsored by: NSF IUCRC Center for Unmanned Aerial Systems (C-UAS)

This work develops a new approach to decentralized sensor fusion and trajectory optimization to enable multiple networked UAS assets to cooperatively localize moving RF signal sources on the ground in the presence of uncertainties in ownship states and sensing models. Our approach ties together model predictive planning with the recently developed idea of factorized distributed data fusion (FDDF), which allows each tracker vehicle to ignore state uncertainties for other vehicles and absorb new target state and local model information without sacrificing overall estimation performance. This approach will significantly reduce communication and computational overhead, and allow vehicles to maintain statistical consistency as well as accurately predict expected local information gains to efficiently devise receding horizon tracking trajectories, even in large ad hoc networks.

Learning for Coordinated Autonomous Robot-Human Teaming in Space Exploration

Sponsored by: NASA Space Technology Research Fellowship Program

In human-robot teams, the effects of interactions at a variety of distances have not been well studied. We argue that teammate interactions over multiple distances form an important part of many human-robot teaming applications. New modeling and learning approaches are needed to build an accurate and reliable understanding of actual human-robot operations at multiple time, space, and information scales, thus realizing the full potential of teaming in complex future applications for space exploration.

TALAF (Tactical Autonomy Learning Agent Framework)

Sponsored by: Air Force Research Laboratory; in partnership with Orbit Logic, Inc.

This research developed a novel software and learning architecture for optimally adapting the behaviors of autonomous agents in simulated air combat engagements. The key innovation is a Gaussian Process Bayes Optimization (GP/BO) learning engine that evaluates metrics from simulation runs while intelligently modifying tunable agent parameters to seek optimum outcomes in complex multi-dimensional trade spaces. The research topic targeted more effective training of pilots at lower cost, but the onboard agent-based software used by the training function of the architecture also applies to both unmanned combat aircraft and onboard advising capability, which can allow pilots to be more dominant in engagements.
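The general shape of a GP/BO loop can be sketched in a few dozen lines. The example below is a generic, one-parameter illustration and not the TALAF engine. The objective `simulated_score` is a hypothetical stand-in for a metric returned by a simulation run, and the kernel length scale, jitter, candidate grid, and expected-improvement acquisition rule are all assumptions.

```python
import math


def rbf(a, b, length=0.2):
    """Squared-exponential kernel with unit signal variance (assumed hyperparameters)."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [r] for row, r in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x


def gp_posterior(xs, ys, query, jitter=1e-6):
    """Zero-mean GP posterior mean and standard deviation at a single query point."""
    gram = [[rbf(a, b) + (jitter if i == j else 0.0) for j, b in enumerate(xs)]
            for i, a in enumerate(xs)]
    k_star = [rbf(query, a) for a in xs]
    mean = sum(k * w for k, w in zip(k_star, solve(gram, ys)))
    var = 1.0 - sum(k * w for k, w in zip(k_star, solve(gram, k_star)))
    return mean, math.sqrt(max(var, 1e-12))


def expected_improvement(mean, std, best):
    """Expected improvement over the best score seen so far (maximization)."""
    z = (mean - best) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (mean - best) * cdf + std * pdf


def simulated_score(param):
    """Hypothetical stand-in for a metric computed from one simulation run."""
    return -(param - 0.3) ** 2


def bayes_opt(objective, n_iters=10):
    xs = [0.0, 1.0]                        # initial parameter settings to try
    ys = [objective(x) for x in xs]
    grid = [i / 100 for i in range(101)]   # candidate parameter values
    for _ in range(n_iters):
        best = max(ys)

        def acquisition(x):
            mean, std = gp_posterior(xs, ys, x)
            return expected_improvement(mean, std, best)

        candidate = max(grid, key=acquisition)
        xs.append(candidate)
        ys.append(objective(candidate))
    i_best = max(range(len(ys)), key=ys.__getitem__)
    return xs[i_best], ys[i_best]


best_param, best_score = bayes_opt(simulated_score)
```

Each iteration spends one simulated run where the surrogate model expects the largest improvement, which is what makes this kind of loop attractive when every evaluation is expensive.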

Event-Triggered Cooperative Localization

Collaborators: University of California San Diego and SPAWAR

This research focuses on a novel cooperative localization algorithm for a team of robotic agents to estimate the state of the network via local communications. Exploiting an event-based paradigm, agents only send measurements to their neighbors when the expected benefit of using this information is high. Because agents know the event-triggering condition for measurements to be sent, the lack of a measurement is also informative and is fused into state estimates. The benefit of this implicit messaging approach is that it can reproduce nearly optimal localization results while using significantly less power and bandwidth for communication.
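The point that a missing measurement still carries information can be illustrated with a scalar example. The sketch below is not the lab's algorithm. It assumes a simple innovation-threshold trigger (send only if the measurement differs from the shared prediction by more than `threshold`) and a Gaussian moment-matching approximation for the silent case. All function names and numbers are hypothetical.

```python
import math


def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def should_send(measurement, predicted_mean, threshold):
    """Assumed trigger: transmit only when the innovation is large."""
    return abs(measurement - predicted_mean) > threshold


def explicit_update(mean, var, measurement, noise_var):
    """Standard scalar Kalman update when the measurement is received."""
    gain = var / (var + noise_var)
    return mean + gain * (measurement - mean), (1.0 - gain) * var


def implicit_update(mean, var, noise_var, threshold):
    """Update when nothing is received (threshold must be positive).

    Silence means the innovation lies in [-threshold, threshold]. The innovation
    is then a zero-mean truncated Gaussian, so the mean is unchanged and the
    variance shrinks by a moment-matched amount.
    """
    innovation_var = var + noise_var
    gain = var / innovation_var
    a = threshold / math.sqrt(innovation_var)
    shrink = 2.0 * a * normal_pdf(a) / (2.0 * normal_cdf(a) - 1.0)
    return mean, var - gain * gain * innovation_var * shrink


# Hypothetical numbers: prior variance 4, sensor noise variance 1, threshold 1.
prior_mean, prior_var, noise_var, threshold = 0.0, 4.0, 1.0, 1.0
_, explicit_var = explicit_update(prior_mean, prior_var, 0.5, noise_var)
implicit_mean, implicit_var = implicit_update(prior_mean, prior_var, noise_var, threshold)
```

In this setup the implicit update lands between the prior variance and the variance of a full explicit update: silence is less informative than a real measurement, but it is not uninformative.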

Audio Localization and Perception for Robotic Search

Sponsored by: CU Boulder CEAS Discovery Learning Apprenticeship Program

This undergraduate research project looks at techniques for incorporating sound-based perception and localization into autonomous mobile robotic search problems in human environments. Nearly all robotic exteroception algorithms rely heavily on active or passive vision (e.g. lidar, cameras), and most autonomous robots today are effectively “deaf”, i.e. they cannot incorporate ambient sound information from their environments into higher-level reasoning and decision making. Unlike active sonar, perception based on ambient sound is passive in nature and must be able to handle a wide spectrum of stimuli. The goal of this project is to develop software and hardware capabilities that enable an autonomous mobile robot to augment other sensory sources (onboard visual target detection, human inputs, etc.) with onboard audio detection and localization for a multi-target search and tracking task in a cluttered indoor environment.
